Introduction

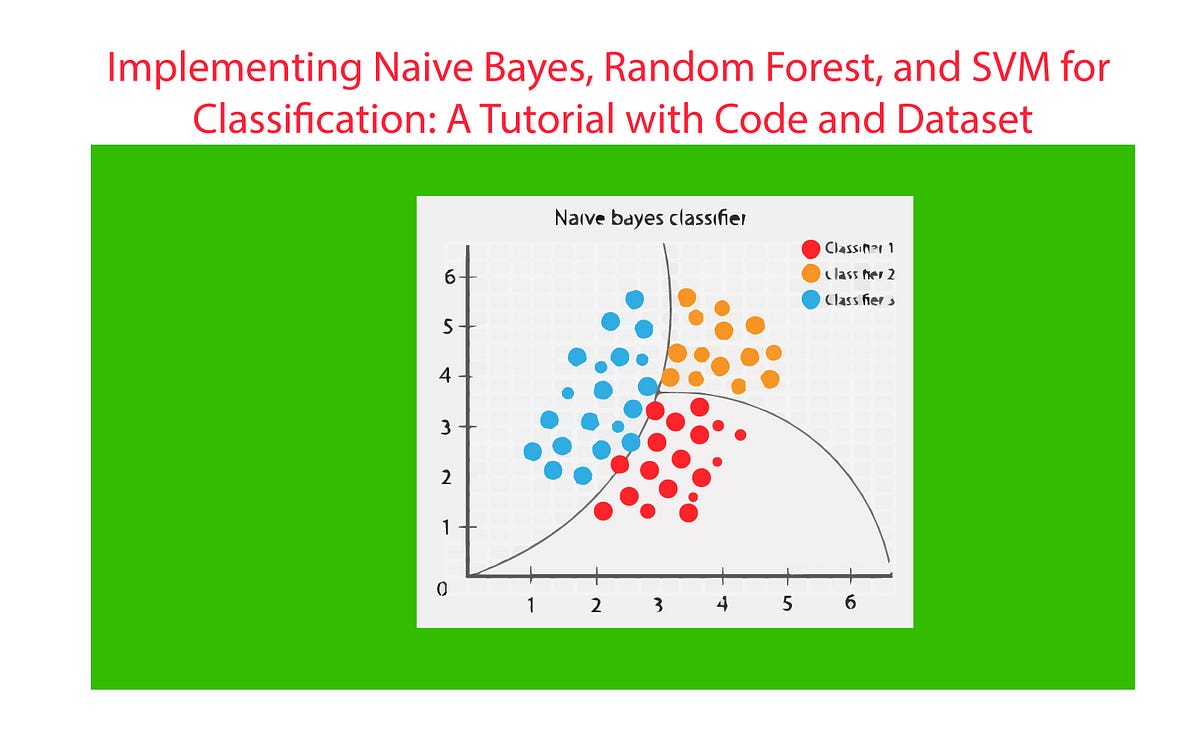

Artificial intelligence (AI) is a broad field that includes many models and techniques for enabling machines to imitate human intelligence. Among these techniques, the Naive Bayes model stands out as a simple yet powerful probabilistic classifier used in a wide range of applications. In this article, we explore the basics of the Naive Bayes model, its underlying principles, and its practical applications in artificial intelligence.

What is the Naive Bayes Model?

The Naive Bayes model is a probabilistic classifier based on Bayes' theorem, which describes the probability of an event given prior knowledge of conditions related to that event. The model is called "naive" because it makes a simplifying assumption: it assumes that the features in a dataset are independent of one another given the class label. Although this assumption is often unrealistic in real-world situations, the model performs surprisingly well in practice.
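Written out, the independence assumption means that for a sample with features $x_1, \dots, x_n$ and a candidate class $C$ (symbols introduced here for illustration), the class-conditional likelihood factors into one term per feature, so the posterior is proportional to a simple product and the predicted class $\hat{y}$ is the one that maximizes it:

$$ P(C | x_1, \dots, x_n) \propto P(C) \prod_{i=1}^{n} P(x_i | C), \qquad \hat{y} = \arg\max_{C} \; P(C) \prod_{i=1}^{n} P(x_i | C) $$

The denominator of Bayes' theorem, $P(x_1, \dots, x_n)$, is the same for every class, so it can be dropped when only the most probable class is needed.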

Bayes' Theorem

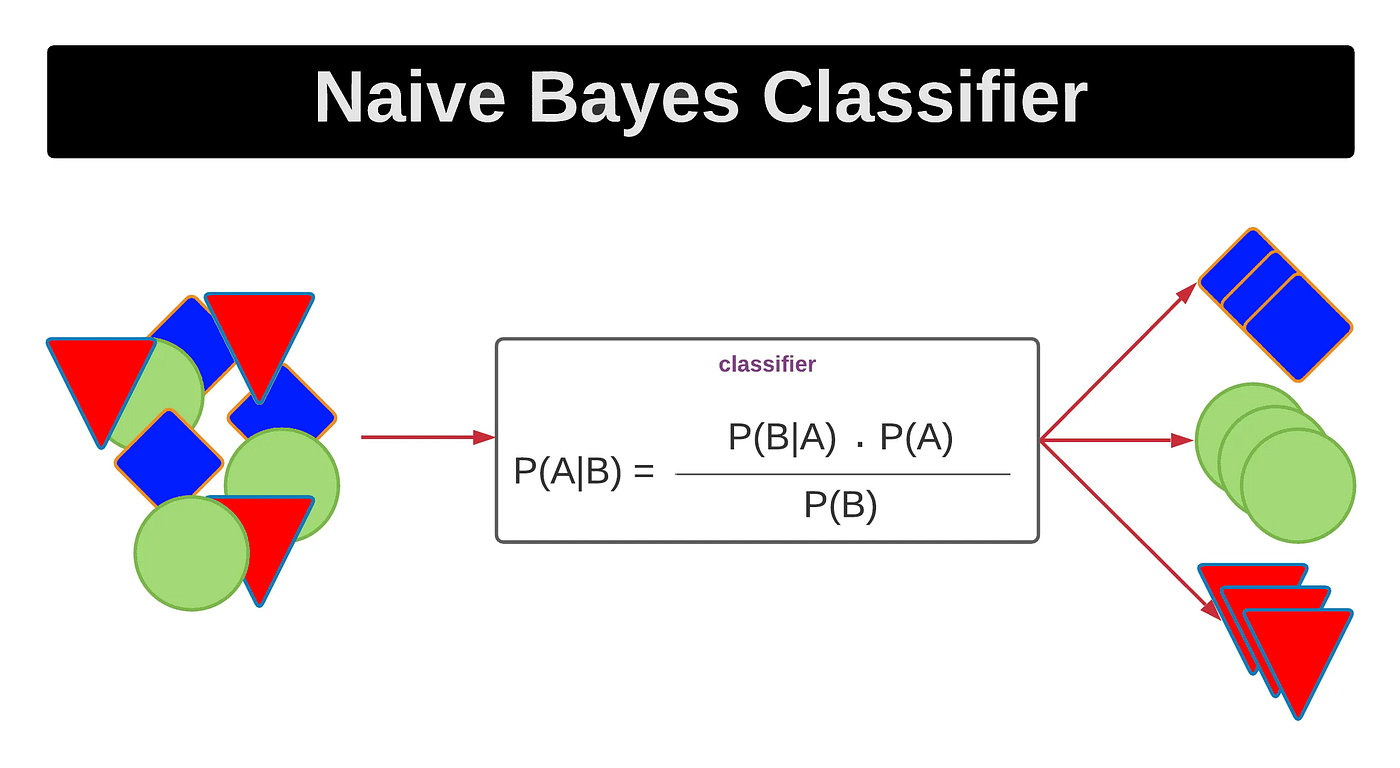

Bayes' theorem is the foundation of the Naive Bayes model and is expressed as:

$$ P(A|B) = \frac{P(B|A) \cdot P(A)}{P(B)} $$

where:

- $P(A|B)$ is the posterior probability of event A occurring given that event B is true.

- $P(B|A)$ is the likelihood of event B occurring given that event A is true.

- $P(A)$ is the prior probability of event A.

- $P(B)$ is the prior probability of event B (also called the evidence).

The Naive Bayes model applies this theorem to compute the posterior probability of each class given the feature values of a sample, and then selects the class with the highest posterior probability.
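The decision rule just described can be traced by hand. The sketch below is a minimal Python illustration, assuming two classes and two keywords whose priors and likelihoods are made-up numbers (not estimated from any real dataset); the names `priors`, `likelihoods`, `posteriors` and `predict` are hypothetical. For simplicity it only multiplies in the likelihoods of the words that are present, which is a simplification of a full Bernoulli model.

```python
# Hypothetical toy numbers, chosen only to illustrate the arithmetic.
priors = {"spam": 0.4, "not spam": 0.6}            # P(class)
likelihoods = {                                     # P(word present | class)
    "spam": {"free": 0.30, "meeting": 0.05},
    "not spam": {"free": 0.02, "meeting": 0.20},
}


def posteriors(words):
    """P(class | words) via Bayes' theorem under the naive independence assumption."""
    scores = {}
    for c, prior in priors.items():
        score = prior
        for w in words:
            score *= likelihoods[c][w]  # multiply one likelihood per observed word
        scores[c] = score
    evidence = sum(scores.values())  # P(B): the same normalizing constant for every class
    return {c: s / evidence for c, s in scores.items()}


def predict(words):
    """Choose the class with the highest posterior probability."""
    post = posteriors(words)
    return max(post, key=post.get)


print(predict(["free"]))                        # → spam
print(round(posteriors(["free"])["spam"], 3))   # → 0.909
print(predict(["meeting"]))                     # → not spam
```

Dividing by the evidence only rescales the scores so they sum to 1; it never changes which class wins.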

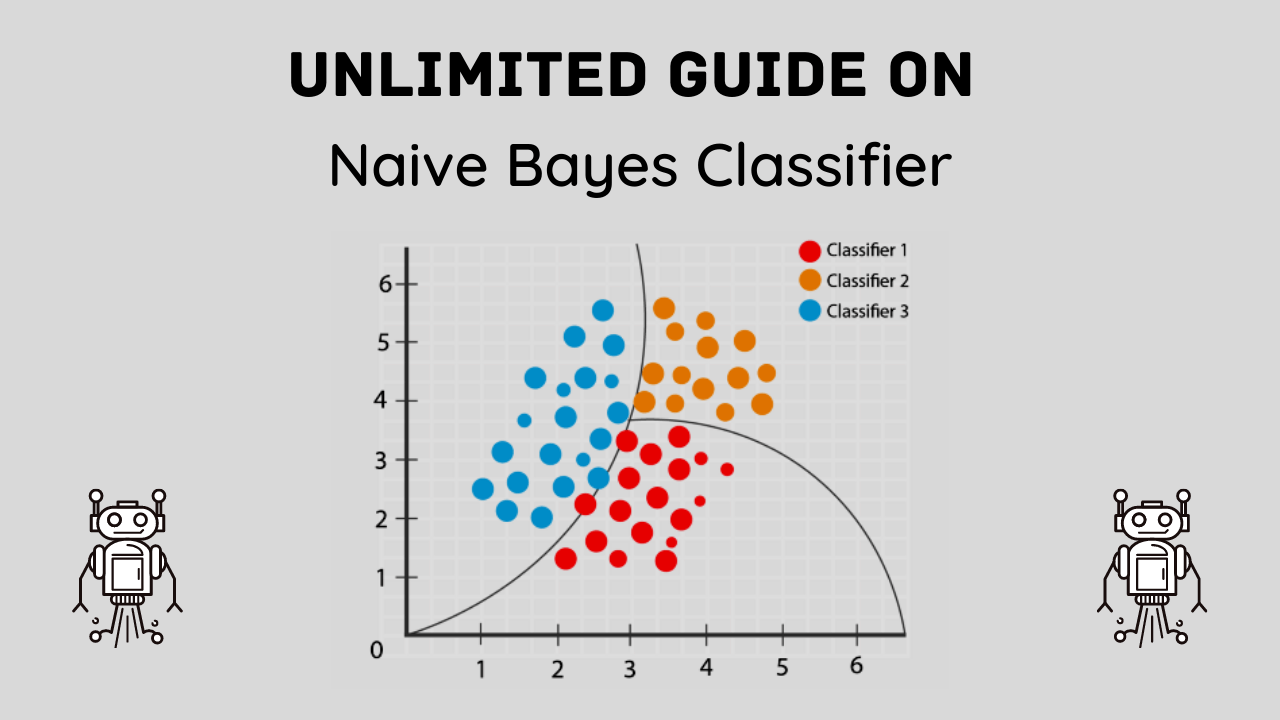

Types of Naive Bayes Classifiers

There are several types of Naive Bayes classifiers, each suited to a different kind of data:

- Gaussian Naive Bayes: Assumes that continuous features follow a normal (Gaussian) distribution. It is particularly useful when the features are approximately normally distributed.

- Multinomial Naive Bayes: Used for discrete data and commonly applied to text classification problems, where word counts or term frequencies are used as features.

- Bernoulli Naive Bayes: Suited to binary/boolean features. It is often used for text classification tasks where the presence or absence of a word in a document is what matters.
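To make the Gaussian variant concrete, here is a minimal from-scratch sketch in pure Python (no third-party libraries). It assumes numeric feature vectors, estimates a prior, mean and variance per class and feature, and scores classes with sums of log-probabilities rather than products, a common choice that avoids numerical underflow. The function names, the toy data and the small variance floor `1e-9` are illustrative assumptions; in practice, libraries such as scikit-learn provide ready-made `GaussianNB`, `MultinomialNB` and `BernoulliNB` classes.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate P(class) plus a per-class mean and variance for every feature."""
    groups = defaultdict(list)
    for row, label in zip(X, y):
        groups[label].append(row)
    model = {}
    for label, rows in groups.items():
        cols = list(zip(*rows))  # one tuple of values per feature
        means = [sum(col) / len(col) for col in cols]
        # Small floor keeps the variance positive if a feature is constant within a class
        variances = [
            sum((v - m) ** 2 for v in col) / len(col) + 1e-9
            for col, m in zip(cols, means)
        ]
        model[label] = (len(rows) / len(X), means, variances)
    return model


def log_gaussian(x, mean, var):
    """Log of the normal density N(x; mean, var)."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)


def predict_gaussian_nb(model, x):
    """Return the class maximizing log P(class) + sum of per-feature log-likelihoods."""
    best_label, best_score = None, -math.inf
    for label, (prior, means, variances) in model.items():
        score = math.log(prior) + sum(
            log_gaussian(xi, m, v) for xi, m, v in zip(x, means, variances)
        )
        if score > best_score:
            best_label, best_score = label, score
    return best_label


X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [5.0, 6.0], [5.2, 5.8], [4.8, 6.2]]
y = ["a", "a", "a", "b", "b", "b"]
model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [1.1, 2.0]))  # → a
print(predict_gaussian_nb(model, [5.1, 6.1]))  # → b
```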

Applications of the Naive Bayes Model

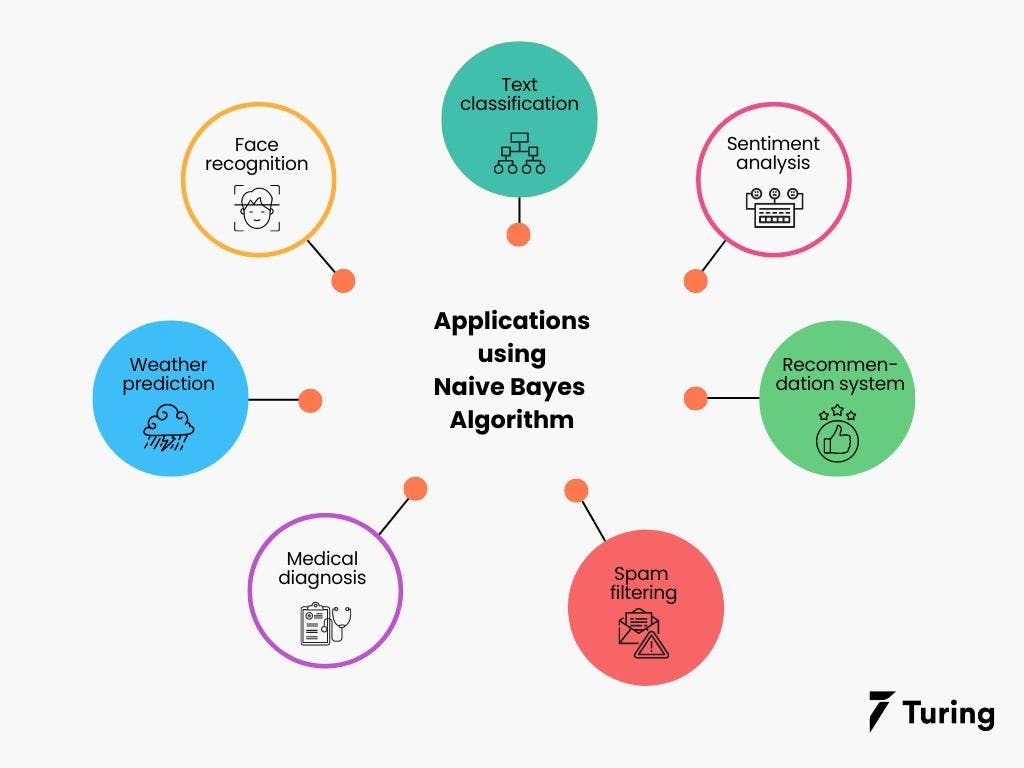

The Naive Bayes model is widely used in many areas of artificial intelligence because of its simplicity, efficiency, and effectiveness. Some common applications include:

- Text Classification: Naive Bayes classifiers are widely used in natural language processing (NLP) tasks such as spam detection, sentiment analysis, and document categorization. For example, email spam filters use the Naive Bayes model to classify incoming messages as spam or not spam based on the occurrence of particular keywords.

- Medical Diagnosis: In healthcare, the Naive Bayes model can help diagnose diseases by analyzing patient symptoms and test results. Using historical clinical data, the model can estimate the likelihood that a patient has a particular disease.

- Recommendation Systems: Naive Bayes classifiers are used in recommendation systems to predict user preferences and suggest relevant items. For example, e-commerce platforms can use this model to recommend products based on customers' past purchases and browsing behavior.

- Image Recognition: The model can also be applied to image recognition tasks, classifying images based on their features. Although more sophisticated models such as convolutional neural networks (CNNs) are usually used for this purpose, Naive Bayes can be a good starting point for simple image classification tasks.

Benefits and Limitations

The Naive Bayes model offers several benefits:

- Simplicity: The model is easy to understand and implement, making it accessible to beginners in machine learning and artificial intelligence.

- Efficiency: It is computationally efficient and needs less training time than more complex models, because training only requires estimating per-class, per-feature statistics from the data.

- Performance: Despite its simplicity, the Naive Bayes model often performs well, especially in text classification tasks.

However, the model also has some limitations:

- Independence Assumption: The assumption that features are independent is often unrealistic, which can hurt the model's performance when features are strongly correlated.

- Zero Probability Problem: If a feature value that never appeared with a given class in the training data shows up in the test data, the model assigns it a probability of zero for that class. Because the per-feature probabilities are multiplied together, that single zero makes the whole posterior for the class zero, leading to incorrect predictions. This issue can be mitigated with techniques such as Laplace smoothing.
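Laplace smoothing is easiest to see at the level of a single estimate. For a count-based estimate of P(word | class), it adds a pseudo-count `alpha` (1 for classic Laplace, or add-one, smoothing) to every word in a vocabulary of size `vocab_size`, so a word never seen with a class gets a small positive probability instead of zero. This is a minimal sketch; the function names and parameters are hypothetical.

```python
def smoothed_likelihood(word_count, total_words_in_class, vocab_size, alpha=1.0):
    """Additive estimate of P(word | class); alpha=1.0 gives Laplace (add-one) smoothing."""
    return (word_count + alpha) / (total_words_in_class + alpha * vocab_size)


def unsmoothed_likelihood(word_count, total_words_in_class):
    """Plain relative frequency: exactly 0.0 for a word never seen with this class."""
    return word_count / total_words_in_class


# A word never seen in 100 training words of a class, with a 50-word vocabulary:
print(unsmoothed_likelihood(0, 100))    # → 0.0
print(smoothed_likelihood(0, 100, 50))  # 1 / 150, roughly 0.00667
```

Because the smoothed estimates over the whole vocabulary still sum to 1, smoothing redistributes probability mass toward unseen words rather than inventing it.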

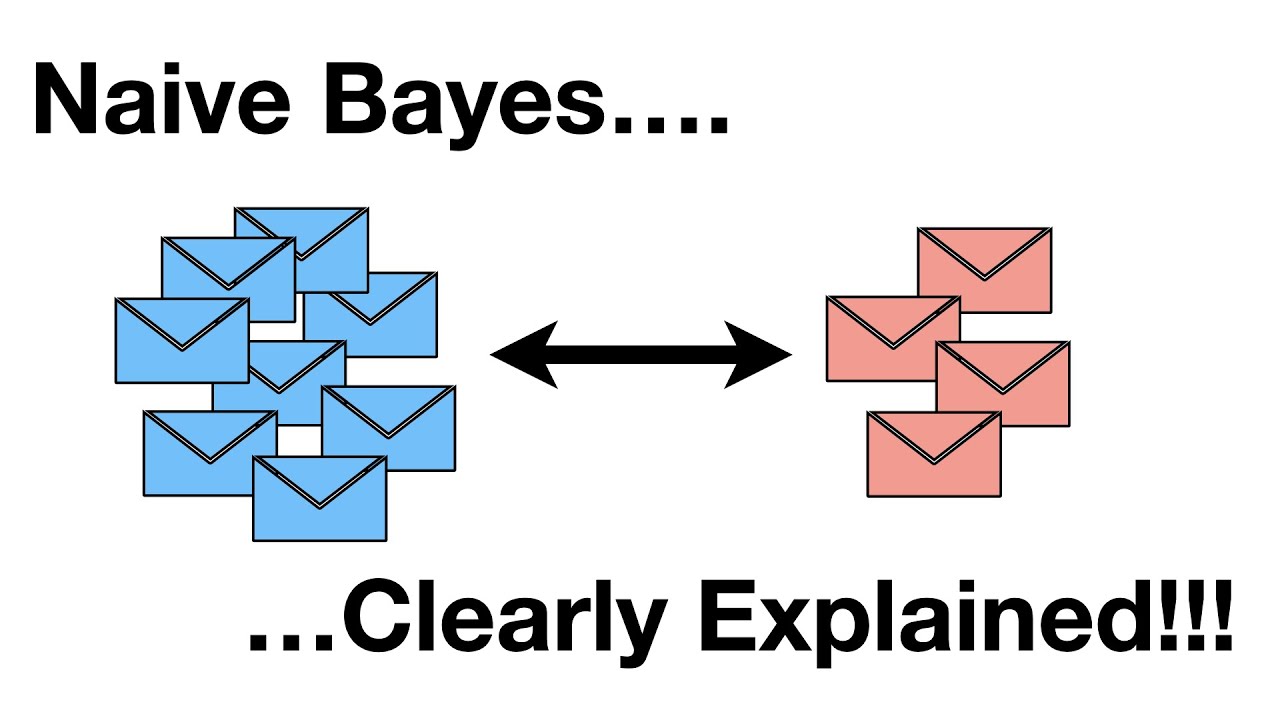

Real-World Example: Email Spam Detection

To illustrate the power of the Naive Bayes model, let's look at its application to email spam detection. Email service providers use Naive Bayes classifiers to filter out spam by analyzing the content of incoming messages. The model is trained on a dataset of labeled emails, where each email is tagged as either "spam" or "not spam." By examining the frequency of specific words and phrases in these emails, the Naive Bayes model learns patterns associated with spam. When a new email arrives, the model computes the probability that it is spam given its content and flags it accordingly. This process helps keep users' inboxes free of unwanted and potentially harmful messages.
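The spam-filtering workflow described above can be sketched as a tiny multinomial Naive Bayes classifier. This is a minimal illustration in pure Python, assuming whitespace tokenization, a four-email toy training set invented for the example, Laplace smoothing, and log-probabilities; the function names `train` and `classify` are hypothetical and not from any particular library. Real spam filters work with far larger datasets and richer preprocessing.

```python
import math
from collections import Counter


def train(docs, labels, alpha=1.0):
    """Fit a multinomial Naive Bayes model on whitespace-tokenized documents."""
    vocab = {w for d in docs for w in d.split()}
    class_counts = Counter(labels)
    word_counts = {c: Counter() for c in class_counts}
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc.split())
    # log P(class)
    log_priors = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    # log P(word | class) with additive (Laplace) smoothing
    log_likelihoods = {}
    for c, counts in word_counts.items():
        total = sum(counts.values())
        log_likelihoods[c] = {
            w: math.log((counts[w] + alpha) / (total + alpha * len(vocab))) for w in vocab
        }
    return log_priors, log_likelihoods


def classify(model, doc):
    """Return the class with the highest log-posterior score for a new document."""
    log_priors, log_likelihoods = model
    scores = {}
    for c in log_priors:
        # Words never seen during training are simply skipped in this sketch.
        scores[c] = log_priors[c] + sum(
            log_likelihoods[c][w] for w in doc.split() if w in log_likelihoods[c]
        )
    return max(scores, key=scores.get)


docs = [
    "win free money now",
    "free prize claim now",
    "meeting agenda for monday",
    "lunch with the team monday",
]
labels = ["spam", "spam", "not spam", "not spam"]
model = train(docs, labels)
print(classify(model, "claim your free prize"))  # → spam
print(classify(model, "team meeting monday"))    # → not spam
```

Working in log space turns the product of many small probabilities into a sum, which keeps long emails from underflowing to zero.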

Enhancing Naive Bayes with Feature Engineering

Although the Naive Bayes model is simple, its performance can often be improved significantly through feature engineering. This process involves transforming raw data into meaningful features that better capture the underlying patterns in the data. For example, in text classification tasks, features can include the presence or absence of specific keywords, word frequencies, or more complex representations such as term frequency-inverse document frequency (TF-IDF). By carefully selecting and engineering features, we can reduce the impact of the model's independence assumption and improve its accuracy and robustness.
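The three feature representations mentioned above can be computed with a few lines of pure Python. This sketch assumes whitespace tokenization and a fixed, hand-picked vocabulary; the TF-IDF variant shown (term count divided by document length, times log(N / document frequency)) is one common textbook definition, and libraries often use smoothed or normalized variants, so exact values will differ. All function names are hypothetical.

```python
import math
from collections import Counter


def binary_features(doc, vocab):
    """1 if the vocabulary word appears in the document, else 0 (suits Bernoulli NB)."""
    words = set(doc.split())
    return [1 if w in words else 0 for w in vocab]


def count_features(doc, vocab):
    """Raw word counts (suits Multinomial NB)."""
    counts = Counter(doc.split())
    return [counts[w] for w in vocab]


def tfidf_features(docs, vocab):
    """TF-IDF rows: (count / doc length) * log(N / document frequency)."""
    n = len(docs)
    tokenized = [d.split() for d in docs]
    df = {w: sum(1 for toks in tokenized if w in toks) for w in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        length = len(toks) or 1  # guard against empty documents
        rows.append([
            (counts[w] / length) * math.log(n / df[w]) if df[w] else 0.0
            for w in vocab
        ])
    return rows


docs = ["free money free", "team meeting", "free lunch"]
vocab = ["free", "money", "team"]
print(binary_features(docs[0], vocab))  # → [1, 1, 0]
print(count_features(docs[0], vocab))   # → [2, 1, 0]
print(tfidf_features(docs, vocab)[0])
```

A word that occurs in every document gets an IDF of log(1) = 0, so TF-IDF automatically down-weights terms that carry no information about the class.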

Combining Naive Bayes with Other Techniques

Another way to boost the performance of the Naive Bayes model is to combine it with other machine learning techniques. Ensemble methods, such as bagging and boosting, can be used to build a more robust classifier. For example, in a bagging approach, multiple instances of the Naive Bayes model are trained on different subsets of the data, and their predictions are aggregated to form the final output. This technique reduces variance and can improve generalization, although Naive Bayes is already a fairly low-variance model, so the gains from bagging alone are often modest. Similarly, boosting techniques such as AdaBoost can iteratively improve the model by focusing on misclassified examples, thereby increasing its overall accuracy.
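Bagging, as described above, can be sketched by training several Naive Bayes models on bootstrap samples (drawn with replacement) and taking a majority vote. This minimal pure-Python illustration assumes 0/1 feature vectors and uses a compact Bernoulli Naive Bayes as the base model; the function names, the toy dataset and the default of 25 estimators are assumptions for illustration only. Boosting (for example AdaBoost) follows a different, sequential reweighting scheme and is not shown here.

```python
import math
import random
from collections import Counter


def fit_bernoulli_nb(X, y, alpha=1.0):
    """Bernoulli Naive Bayes on 0/1 feature vectors, with Laplace smoothing."""
    model = {}
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        n_c = len(rows)
        # Smoothed P(feature_j = 1 | c); never exactly 0 or 1
        p = [(sum(r[j] for r in rows) + alpha) / (n_c + 2 * alpha) for j in range(len(X[0]))]
        model[c] = (math.log(n_c / len(y)), p)
    return model


def predict_bernoulli_nb(model, x):
    """Pick the class with the highest log-posterior; absent features count via 1 - p."""
    def score(c):
        log_prior, p = model[c]
        return log_prior + sum(math.log(pj if xj else 1 - pj) for xj, pj in zip(x, p))
    return max(model, key=score)


def fit_bagged_nb(X, y, n_estimators=25, seed=0):
    """Train one Bernoulli NB per bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        models.append(fit_bernoulli_nb([X[i] for i in idx], [y[i] for i in idx]))
    return models


def predict_bagged(models, x):
    """Aggregate the ensemble's predictions by majority vote."""
    votes = Counter(predict_bernoulli_nb(m, x) for m in models)
    return votes.most_common(1)[0][0]


X = [[1, 1, 0], [1, 0, 0], [1, 1, 0], [0, 0, 1], [0, 1, 1], [0, 0, 1]]
y = ["spam", "spam", "spam", "ham", "ham", "ham"]
models = fit_bagged_nb(X, y)
print(predict_bagged(models, [1, 1, 0]))  # → spam
```

A bootstrap sample can occasionally contain only one class; that member then always votes for it, and the majority vote absorbs such outliers.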

Dealing with Imbalanced Datasets

In many real-world applications, datasets are imbalanced, meaning that the number of instances in one class significantly outnumbers those in another. This imbalance can hurt the performance of the Naive Bayes model, because the class priors learned from the data can bias it toward the majority class. To address this issue, techniques such as oversampling the minority class, undersampling the majority class, or generating synthetic data with methods like SMOTE (Synthetic Minority Over-sampling Technique) can be applied. These techniques help balance the dataset, allowing the Naive Bayes model to learn more effectively and make more accurate predictions.
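The simplest of these rebalancing strategies, random oversampling, duplicates randomly chosen minority-class examples until the classes are the same size. The sketch below is a minimal pure-Python illustration with hypothetical names and toy data; it is not SMOTE, which instead creates new synthetic points by interpolating between a minority example and its nearest minority-class neighbors. Rebalancing is normally applied only to the training split, so the evaluation data keeps its real class distribution.

```python
import random
from collections import Counter


def random_oversample(X, y, seed=0):
    """Duplicate random minority-class rows until every class matches the largest class."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        rows = [x for x, lab in zip(X, y) if lab == label]
        for _ in range(target - n):
            X_out.append(rng.choice(rows))  # copy an existing example of this class
            y_out.append(label)
    return X_out, y_out


X = [[0.1], [0.3], [0.2], [0.4], [0.9]]
y = ["ham", "ham", "ham", "ham", "spam"]
X_bal, y_bal = random_oversample(X, y)
print(sorted(Counter(y_bal).items()))  # → [('ham', 4), ('spam', 4)]
```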

Evaluating the Naive Bayes Model

Evaluating the performance of the Naive Bayes model is critical to ensure its effectiveness in a given application. Common evaluation metrics include accuracy, precision, recall, and the F1 score. Accuracy measures the overall correctness of the model, while precision and recall give insight into its performance on individual classes. The F1 score, the harmonic mean of precision and recall, offers a balanced assessment of the model's performance, especially on imbalanced datasets. Cross-validation techniques, such as k-fold cross-validation, can also be used to assess the model's ability to generalize and to detect overfitting.
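These metrics, and the fold splitting behind k-fold cross-validation, are simple enough to compute by hand. The sketch below is a minimal pure-Python illustration, assuming a binary task with one designated positive class and contiguous, unshuffled folds; the function names and example labels are hypothetical, and libraries such as scikit-learn offer tested equivalents.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, plus precision, recall and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds over n samples."""
    start = 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)  # spread any remainder over the first folds
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


y_true = ["spam", "spam", "ham", "ham", "spam"]
y_pred = ["spam", "ham", "ham", "spam", "spam"]
print(classification_metrics(y_true, y_pred, positive="spam"))
for train_idx, test_idx in k_fold_indices(5, 2):
    print(train_idx, test_idx)  # → [3, 4] [0, 1, 2], then [0, 1, 2] [3, 4]
```

In practice the data is usually shuffled (and, for imbalanced data, stratified) before splitting; the contiguous folds here keep the example easy to follow.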

Practical Challenges and Considerations

Despite its many benefits, deploying the Naive Bayes model in real-world applications comes with its own set of challenges. Handling missing data, scaling features, and dealing with noisy data are some of the practical issues that must be addressed. In addition, the model's performance may vary depending on the nature of the data and the specific problem at hand. Therefore, thorough experimentation and tuning are essential to achieve optimal results. In some cases, more sophisticated models such as decision trees, support vector machines, or neural networks may be better suited to the task.

Future Directions and Advancements

The Naive Bayes model continues to evolve alongside advances in machine learning and artificial intelligence. Researchers are exploring ways to relax the independence assumption and incorporate feature dependencies, making the model more flexible and accurate. Hybrid models that combine Naive Bayes with deep learning techniques are also gaining traction, aiming to offer the best of both worlds: the simplicity and interpretability of Naive Bayes and the powerful representation-learning capabilities of deep neural networks. As AI continues to advance, the Naive Bayes model is likely to remain a valuable tool, benefiting from ongoing research and development.

Conclusion

The Naive Bayes model is a fundamental tool in the toolkit of artificial intelligence and machine learning practitioners. Its simplicity, efficiency, and effectiveness make it a popular choice for a variety of classification tasks. Although the model's assumptions do not always hold, its practical performance in many applications underscores its value. Whether you are classifying text, diagnosing diseases, or building recommendation systems, the Naive Bayes model offers an effective and dependable approach.


FAQs

The Naive Bayes model is mainly used for classification tasks, including spam detection, text classification, and medical diagnosis.

The model is called "naive" because it assumes that all features are independent of one another given the class, which is often not true in real-world situations.

The Gaussian Naive Bayes variant handles continuous data by assuming that, within each class, the features follow a normal (Gaussian) distribution.

The main benefits of Naive Bayes are its simplicity, computational efficiency, and effective performance in many practical applications.

Although it is less common, Naive Bayes can also be used for simple image classification tasks, typically by treating pixel values as features.